오늘은 '리벨리온 AI 반도체가 국제 대회에서 엔비디아와 퀄컴 제치다!'에 대해서 소개해보려고 합니다..

벤치마크란 객관적으로 정의된 워크로드(작업량)을 통해 프로세서가 해당 작업을 얼마나 효율적으로 수행할 수 있는지 확인하는 과정입니다.

MLPerf는 대표적인 NPU(신경망처리장치) 벤치마크입니다. 최근 마이크로소프트가 개발한 챗GPT가 큰 인기를 끌면서 5일 만에 이용자 수 100만명을 돌파하는 유례없는 대중성을 확보했는데요. 이 챗GPT는 초거대AI 모델(대용량 데이터를 스스로 학습해 인간처럼 종합적 추론이 가능한 차세대 AI)이기에 기본적으로 연산량이 방대합니다. 이 말은 곧 데이터 처리 비용(소모전력), 데이터센터 구축 및 운영 비용 등으로 연결되는데 챗GPT를 만든 마이크로소프트 그리고 다른 초거대AI 모델을 구상하는 사업자의 입장에서는 비용 문제 해결이 필수적인 상황입니다. 실제로 MS도 비용절감을 위해 최근 리벨리온과 같은 AI반도체 스타트업에게 반도체 샘플을 요구하는 콜드 메일(Cold mail, 일반적으로 협업 제안에 사용됨)을 보내고 있다고 합니다. MS와 같은 대형 테크 기업에서 스타트업에게 콜드 메일을 보내는 일은 이례적인데요, 그만큼 챗GPT의 비용 절감과 운영 효율화가 시급한 상황으로 예상됩니다. 현재 챗GPT와 같은 AI플랫폼에는 범용 GPU가 사용되는데 GPU는 기본적으로 영상처리, 3D렌더링과 모델링 등에 사용되는 범용 장치로서 결국 비용 절감을 위해서는 AI 전용 하드웨어가 필요한데, 리벨리온의 '아톰(ATOM)'이 AI 전용 반도체입니다.

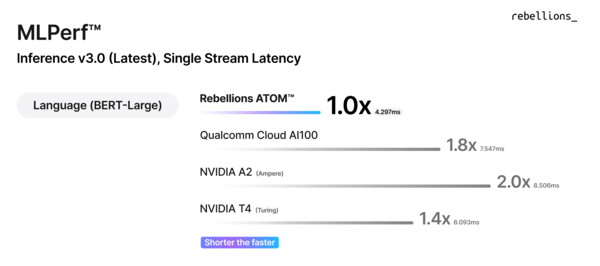

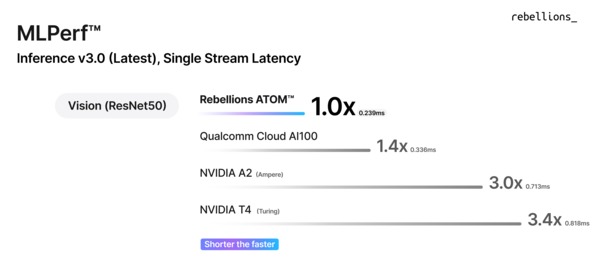

리벨리온은 지난 2월 개발한 AI반도체 '아톰'으로 이번 성능 테스트를 진행했습니다. 테스트는 벤치마크에서 대표적으로 사용되는 언어모델인 BERT-Large(이하 BERT)와 비전모델 ResNet50로 진행됐습니다.

그 결과 아톰은 BERT 부문에서 퀄컴의 최신 AI반도체(클라우드AI100), 엔디비아의 동급 GPU(그래픽처리장치) A2,T4 대비 1.5~2배 앞서는 처리속도를 보였습니다. ResNet50 부문 싱글스트림 처리속도(0.239ms)에서는 퀄컴 대비 1.4배, 엔비디아 대비 3배 이상의 속도를 입증했습니다.

싱글스트림 테스트는 1개의 단일 데ㅣ이터를 처리할 때의 지연속도를 비교하는데 데이터 처리 속도는 칩의 크기에 따라 상이합니다. 1개의 단일 데이터 처리 속도를 비교하면 비교적 동등한 조건에서 반도체의 성능을 평가할 수 있습니다.

즉, 리벨리온의 아톰이 동등한 조건에서 높은 처리속도를 보였다는 말은 설계 측면에서 우수성을 인정받았다는 의미입니다.

박성현 리벨리온 대표는 "칩의 크기나 공정에 큰 영향을 받지 않는 싱글스트림 지연시간이 코어 아키텍처의 우수성을 가장 잘 드러내는 지표"라며 "리벨리온은 언어모델과 비전모델 모두 싱글스트림 결과를 제출해 글로벌 경쟁력을 입증한 것이 가장 큰 차별점"이라고 강조했습니다.

리벨리온에 따르면 아톰의 전력효율이 GPU 대비 비전모델의 경우 10배, 언어모델 3~4배 높습니다.이는 아톰이 AI플랫폼에 상용화된다면 전력 소모량을 획기적으로 줄일 수 있다는 의미입니다. 또 고객들이 기존의 GPU를 사용할 때와 유사한 환경에서 AI서비스가 가능하도록 아톰에 최적화된 컴파일러, 펌웨어, 드라이버 등 소프트웨어도 자체 개발중이라고 합니다.

박 대표는 "대한민국에서도 GPT같은 트랜스포머를 지원할 수 있는 AI반도체가 출시됐다는 의의가 크다"며 "언어모델 뿐 아니라 요즘은 고성능 비전 모델들도 트랜스포머를 사용하기 때문에, AI반도체를 활용한 고성능 서비스를 위해서는 아톰이 국내 유일의 대안이 될 것"이라고 말했습니다.

한편 아톰은 삼성전자의 5나노 파운드리를 통해 2024년 1분기 양산 예정이며, 리벨리온은 KT가 올해 중 출시할 초거대 AI서비스 '믿음' 경량화 모델에 아톰을 적용할 예정이라고 합니다.

Today, I would like to introduce 'Ribelions' AI Semiconductor beats NVIDIA and Qualcomm' at the international competition'.

Benchmarking is the process of determining how efficiently a processor can perform its tasks through objectively defined workloads. MLPerf is a representative NPU (Neural Network Processing Unit) benchmark. Recently, Chat GPT developed by Microsoft has become very popular, securing an unprecedented popularity that surpassed 1 million users in five days. This Chat GPT is a super-large AI model (the next generation AI that can learn large amounts of data on its own and make comprehensive inference like humans), so it basically has a huge amount of computation. This leads to data processing costs (power consumption), data center construction and operational costs, and for Microsoft, which created ChatGPT, and operators who envision other mega-AI models, it is essential to solve the cost problem. In fact, Microsoft is also recently sending cold mail (Coldmail, commonly used in collaborative proposals) to AI semiconductor startups such as Ribelions to reduce costs. It is unusual for large tech companies such as Microsoft to send cold mail to startups, and it is expected that Chat GPT will be in urgent need of cost reduction and operational efficiency.

Currently, general-purpose GPUs are used in AI platforms such as Chat GPT, and GPUs are basically general-purpose devices used for image processing, 3D rendering, and modeling, and AI-only hardware is needed to reduce costs.

Ribelions conducted this performance test with AI semiconductor 'ATOM' developed in February. The test was conducted with BERT-Large (hereinafter referred to as BERT), a language model typically used in benchmarks, and a vision model ResNet50. As a result, Atom showed 1.5 to 2 times faster processing speed in the BERT sector than Qualcomm's latest AI semiconductor (Cloud AI100), Ndivia's equivalent GPU (Graphics Processing Unit) A2 and T4. In the ResNet50 segment single stream processing speed (0.239 ms), we demonstrated 1.4 times faster than Qualcomm and more than 3 times faster than Nvidia.

A single-stream test compares the delay rate when processing one single data, which varies depending on the size of the chip. A comparison of one single data processing rate allows you to evaluate the performance of a semiconductor under relatively equal conditions.

This means that the high processing speed of the Revelions' ATOM under equal conditions means that it has been recognized for its excellence in design.

"The single-stream latency, which is not greatly affected by the size or process of the chip, is the best indicator of the excellence of the core architecture," said Park Sung-hyun, CEO of Ribelions.

According to Ribelions, the power efficiency of ATOM is 10 times higher than that of GPU and 3 to 4 times higher than that of language models.This means that if ATOM is commercialized on the AI platform, it can drastically reduce power consumption. It is also said that it is developing its own software such as compilers, firmware, and drivers optimized for ATOM so that customers can provide AI services in an environment similar to that of existing GPUs.

CEO Park said, "It is significant that AI semiconductors that can support Transformers such as GPT have been released in Korea," adding, "Since not only language models but also high-performance vision models use Transformers these days, ATOM will be the only alternative in Korea."

Meanwhile, ATOM will be mass-produced in the first quarter of 2024 through Samsung Electronics' 5-nano foundry, and Revelion will apply ATOM to KT's ultra-large AI service "Trust" lightweight model to be released this year.

'IT&Tech' 카테고리의 다른 글

| Samsung plans to replace 'Google' with Microsoft's 'Bing' as the search engine for the Galaxy. ; 갤럭시 검색 엔진 '구글' 대신 마이크로소프트 '빙'으로 대체 (0) | 2023.05.03 |

|---|---|

| Dot AI craze (0) | 2023.05.02 |

| AI ; Machine Learning & Deep Learning (0) | 2023.05.01 |

| 가상현실 기술의 교육적 활용 방법 (0) | 2023.04.28 |

| 알리바바의 생성형 AI 통이치엔원 ; Alibaba rolls out ChatGPT rival, Tongyi Qianwen (2) | 2023.04.27 |